From a programming perspective, .NET offers several ways to cache data, each useful for different purposes. ASP.NET exposes its built-in cache through two properties:

“HttpRuntime.Cache”

and

“HttpContext.Current.Cache”

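both of which can be used to cache objects. The two return the same application-wide cache; the main practical difference is that HttpContext.Current is only available while a request is being processed, whereas HttpRuntime.Cache does not depend on a current request. A good argument for using the former can be found here: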

http://weblogs.asp.net/pjohnson/archive/2006/02/06/437559.aspx

So, now that you have a built-in caching class, what’s wrong with calling:

HttpRuntime.Cache.Insert("SomeKey", someObject);

for all your cacheable web objects?

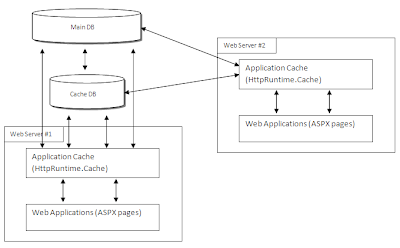

The problem lies in what happens when you move your application from a single web server to a dual-server, multi-server or web farm environment. Data requested through one server is cached on that server, but there is no guarantee that the next request for the same data will reach the same server in the farm. When it lands on a different server, that means another trip to the database and a re-caching of the data on the new server, and so on.

Our solution is to have two levels of caching: the ASP.NET memory cache, implemented using the HttpRuntime.Cache class, and a DBCache, which serialises objects and stores them in our Cache database.

With this dual-layer approach, we only go to the raw data in our main database the first time a data object is needed, and then add the resulting object to both the DBCache and the MemoryCache. If the user’s next request for the same object arrives at the same web server as before, they get the object directly from the in-process IIS cache (HttpRuntime). If the same request arrives at a different web server in the farm, the object is de-serialised from the DBCache, which is faster than reading the raw data tables in the main database and re-computing the object. That web server’s memory cache is then populated with the same object, so the next time it is requested at that server the response comes straight from memory.
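The lookup order described above can be sketched as follows. This is a minimal illustration rather than our production code: `ICacheStore`, `InMemoryStore` and `TwoLevelCache` are hypothetical names, and a dictionary stands in for both layers so that the sketch runs without IIS or SQL Server. In a real deployment the first store would wrap HttpRuntime.Cache and the second would read and write the cache database.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical abstraction over one cache layer; not an ASP.NET type.
public interface ICacheStore
{
    bool TryGet(string key, out byte[] value);
    void Set(string key, byte[] value);
}

// Dictionary-backed stand-in used for both layers in this sketch.
public sealed class InMemoryStore : ICacheStore
{
    private readonly Dictionary<string, byte[]> _items = new Dictionary<string, byte[]>();

    public bool TryGet(string key, out byte[] value)
    {
        return _items.TryGetValue(key, out value);
    }

    public void Set(string key, byte[] value)
    {
        _items[key] = value;
    }
}

public sealed class TwoLevelCache
{
    private readonly ICacheStore _memory; // local to one web server
    private readonly ICacheStore _shared; // shared by every server in the farm

    public TwoLevelCache(ICacheStore memory, ICacheStore shared)
    {
        _memory = memory;
        _shared = shared;
    }

    // Returns the serialised object for the key, computing it only if
    // neither cache layer already holds it.
    public byte[] GetOrAdd(string key, Func<byte[]> compute)
    {
        byte[] value;

        // 1. Fastest path: this server has already seen the object.
        if (_memory.TryGet(key, out value)) return value;

        // 2. Another server generated it earlier: copy it into local memory.
        if (_shared.TryGet(key, out value))
        {
            _memory.Set(key, value);
            return value;
        }

        // 3. First request anywhere: compute from raw data, fill both layers.
        value = compute();
        _shared.Set(key, value);
        _memory.Set(key, value);
        return value;
    }
}
```

Creating two `TwoLevelCache` instances over the same shared store models two web servers: the expensive `compute` delegate runs once, on whichever server receives the first request.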

We generally use this dual caching for generated objects shared by many users. The result of the first user’s request is cached, and all further requests by any user are served from the caches.

An example in our case is a user requesting a 14-day moving average of Microsoft stock (MSFT) for the last 5 years. The first request takes the raw price data from our Prices table and computes an array of doubles representing the MA value for each day over the last 5 years. It is very likely that another user will want to calculate the same values (or a portion thereof, but how we handle that from our cache is a different story!), so we serialise the double array and store it in the caches. A subsequent request for the same calculation requires neither a trip to the large Prices table nor any computation; the only question is whether the request is fulfilled by the IIS cache on the web server or by our DB cache.
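As a rough illustration of the moving-average example, the sketch below computes the array, derives a cache key and serialises the result. Everything in it is an assumption made for illustration: a simple (unweighted) moving average that only emits values once a full window is available, a made-up key format, and raw byte copying as the serialisation. The `MovingAverage` class and its method names are hypothetical.

```csharp
using System;
using System.Globalization;

public static class MovingAverage
{
    // Simple moving average. Output element j is the mean of
    // prices[j .. j + window - 1], so the result has
    // prices.Length - window + 1 elements.
    public static double[] Simple(double[] prices, int window)
    {
        if (prices == null) throw new ArgumentNullException("prices");
        if (window <= 0) throw new ArgumentOutOfRangeException("window");
        if (prices.Length < window) return new double[0];

        var result = new double[prices.Length - window + 1];
        double sum = 0;
        for (int i = 0; i < prices.Length; i++)
        {
            sum += prices[i];
            if (i >= window) sum -= prices[i - window]; // drop the value leaving the window
            if (i >= window - 1) result[i - window + 1] = sum / window;
        }
        return result;
    }

    // Hypothetical key format: identical requests from different users
    // must map to the same key for the cache to be shared.
    public static string CacheKey(string symbol, int window, DateTime from, DateTime to)
    {
        return string.Format(CultureInfo.InvariantCulture,
            "MA:{0}:{1}:{2:yyyyMMdd}:{3:yyyyMMdd}",
            symbol.ToUpperInvariant(), window, from, to);
    }

    // Assumed serialisation: copy the raw bytes of the double array.
    public static byte[] Serialize(double[] values)
    {
        var bytes = new byte[values.Length * sizeof(double)];
        Buffer.BlockCopy(values, 0, bytes, 0, bytes.Length);
        return bytes;
    }

    public static double[] Deserialize(byte[] bytes)
    {
        if (bytes.Length % sizeof(double) != 0)
            throw new ArgumentException("Length is not a multiple of 8 bytes.", "bytes");
        var values = new double[bytes.Length / sizeof(double)];
        Buffer.BlockCopy(bytes, 0, values, 0, bytes.Length);
        return values;
    }
}
```

For the MSFT request above, the key would be built with something like `MovingAverage.CacheKey("MSFT", 14, from, to)`, and the serialised array stored under it in both caches.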

We store all the DB Cache data on our “CacheServer”, which is a separate physical server running a copy of SQL Server (i.e. independent of our main SQL Server). Incidentally, you don’t need a full enterprise edition of SQL Server for the cache server: SQL Server Express has more than enough power and capacity for the needs of our cache, and it’s free.

This approach also has the added benefit of allowing us to persist our cache for as long as we want. The cache is not destroyed when a web server restarts, for example, and we can add additional web servers very easily, knowing that they will have instant access to a long history of cached objects generated by the web applications running on the other web servers.

LRU Policy:

Finally, we have a least recently used (LRU) policy for the DB Cache (the IIS cache implements an LRU policy natively, though it’s not obvious from the documentation). A cache monitoring service runs on our cache server and automatically removes any items that are past their “expiry” date. When a new item is added and there is not enough “room” in the cache, the least recently used item is removed. The LRU policy on the SQL Server cache is handled by a column in the cache table that ranks the rows from least recently used (position 1) to most recently used. For example, when a row in the cache table is accessed, it moves to the most recently used position and every row that was ranked above it moves down by one.

So, removing the least recently used item means deleting the row at position 1 and decrementing the LRU column in the remaining rows by 1. (In practice, when we hit an LRU operation we delete a large number of rows at once, so that we are not constantly updating rows every time a new item is inserted.)
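The position bookkeeping can be modelled in memory as below. This is only a sketch of the idea, not the SQL we run: `LruPositionTable` is a hypothetical class, the dictionary stands in for the key and LRU columns of the cache table, and the capacity and batch size are illustrative parameters.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// In-memory model of the cache table's key column and LRU column.
// Position 1 is the least recently used row; position Count is the most recent.
public sealed class LruPositionTable
{
    private readonly Dictionary<string, int> _position = new Dictionary<string, int>();
    private readonly int _capacity;
    private readonly int _evictBatch;

    public LruPositionTable(int capacity, int evictBatch)
    {
        if (capacity <= 0) throw new ArgumentOutOfRangeException("capacity");
        if (evictBatch <= 0 || evictBatch > capacity) throw new ArgumentOutOfRangeException("evictBatch");
        _capacity = capacity;
        _evictBatch = evictBatch;
    }

    public int Count { get { return _position.Count; } }
    public bool Contains(string key) { return _position.ContainsKey(key); }
    public int PositionOf(string key) { return _position[key]; }

    // Accessing a row moves it to the most recently used position and
    // shifts every row that was ranked above it down by one.
    public void Touch(string key)
    {
        int old = _position[key];
        foreach (string k in _position.Keys.ToList())
        {
            if (_position[k] > old) _position[k]--;
        }
        _position[key] = _position.Count;
    }

    // Adding a row to a full table first evicts a whole batch of the
    // oldest rows, so later inserts do not each trigger an eviction.
    public void Add(string key)
    {
        if (_position.ContainsKey(key)) { Touch(key); return; }
        if (_position.Count >= _capacity) EvictOldest(_evictBatch);
        int next = _position.Count + 1;
        _position[key] = next;
    }

    // Delete the n least recently used rows and renumber the survivors.
    private void EvictOldest(int n)
    {
        foreach (string k in _position.Keys.ToList())
        {
            if (_position[k] <= n) _position.Remove(k);
            else _position[k] -= n;
        }
    }
}
```

With a capacity of 3 and a batch size of 2, adding a, b and c, touching a, and then adding d evicts b and c in one pass and leaves a at position 1 and d at position 2.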

Further Reading:

http://www.c-sharpcorner.com/UploadFile/raj1979/Caching12312007041352AM/Caching.aspx

http://msdn2.microsoft.com/en-us/library/xsbfdd8c.aspx

http://weblogs.asp.net/justin_rogers/archive/2004/10/23/246745.aspx

Scott Tattersall is lead developer of stock alerts, stock charts, and market sentiment for Zignals

2 comments:

Hi Scott,

why aren't you using memcached?

Why have you created your own solution?

Thx

Peter Gfader

Hi Peter,

Mainly because when we built the caching we weren't thinking about building a caching solution itself, we just wanted a quick, integrated solution tailored to solving a specific problem (lots of calculated financial data we didn't want to recalc from raw db data). So we didn't need a caching solution in general (though it turned out to be used for more than just financial data in the end).

Thanks for the comment - we'll have a look at memcached and see how much faster it is and if we can use it as a generic cache as well as for our specific purposes.

Thanks,

Scott.
